Our team strives to enhance, not replace, the role of the human in the loop at every partner organization. We applied this approach during our recent collaborations with the New York Stem Cell Foundation Research Institute (NYSCF), helping the foundation use data science to optimize its innovative approach to producing induced pluripotent stem cells (iPSCs).

A laboratory at the forefront of innovative disease treatment

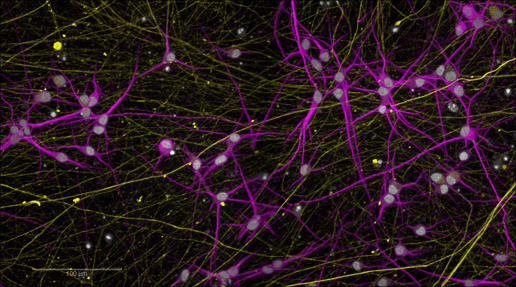

NYSCF is an independent nonprofit organization that seeks to “accelerate cures for the major diseases of our time through stem cell research.” In particular, NYSCF focuses on the production and use of iPSCs. These cells, which can be grown from anyone’s donated blood or skin samples, can be converted into any of the 200+ types of cells in our bodies, providing valuable, individualized material for research and testing disease treatments.

NYSCF has developed an innovative robotic system to produce iPSCs on a large scale: the NYSCF Global Stem Cell Array® (TGSCA™). The ability to produce iPSCs in such quantities is invaluable, not only in helping NYSCF conduct innovative medical research, but also in enabling others by distributing these cell lines through the NYSCF Repository. NYSCF has generated large panels of cell lines spanning diseases including Alzheimer’s, macular degeneration, and rare diseases. At any given time, NYSCF is processing hundreds to thousands of samples in parallel culture, 10–100X the capability you would find in most other research laboratories.

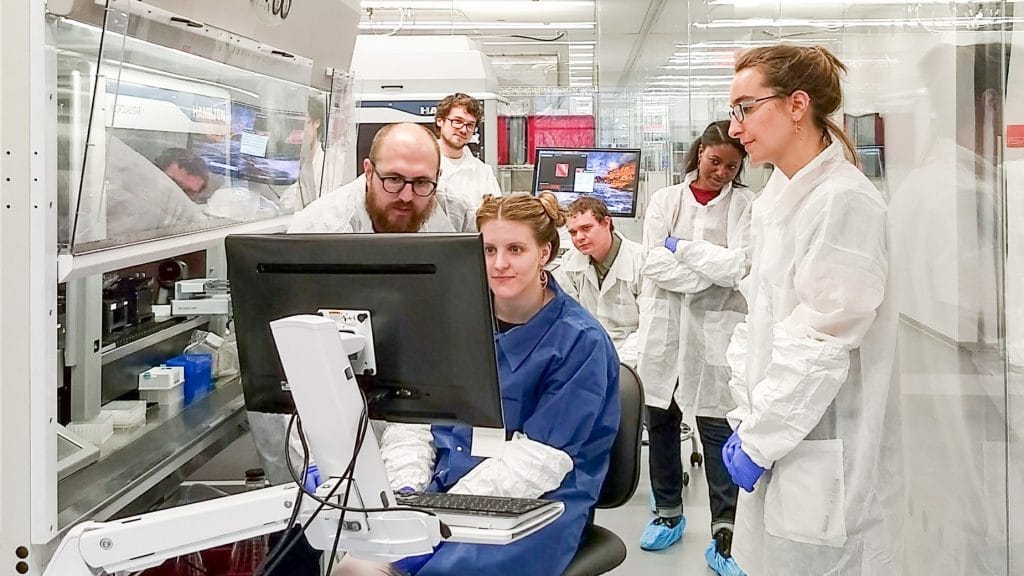

Humans in the loop

While most of the TGSCA™ is automated, there is a vital review process between experiment stages to determine if plates of cell cultures are ready to enter the next step in the cell culture process. Until now, this work has required up to an hour each day from a scientist to review the data and make a judgment call based on their expertise and experience as to whether the plate is ready to continue passaging.

“If I am working, I am going to make one set of decisions. Then the next weekend, someone else will be here and they’ll make another set of decisions,” said Daniel Paull, PhD, NYSCF Senior Vice President of Discovery and Platform Development. “If we can have a tool that guides decision-making in a very standardized way, that will reduce the variation and perhaps increase our efficiencies as well as the quality of all of the cell lines that we make.”

Additionally, on a practical level, machine availability is a crucial consideration when producing stem cells at scale, meaning any use of the robots must be carefully and strategically planned. Biology often has its own ideas on timing, however, and the rate at which cells grow can be highly variable.

To make these processes more efficient, NYSCF began working towards a single machine learning model, or “oracle,” that could serve as a centralized source of future state prediction, and sought to determine whether such a tool could be built from its vast repository of historical data. The organization rigorously collects a rich set of biological and logistical data points during the cell culturing process, including the amount of time cells spend growing, the environmental conditions, and the hardware specifications in effect while each sample is processed.

The ask

NYSCF had begun exploring the concept of the optimal time to “passage” (transfer) cells at the individual well level, developing an initial approach to predicting potential well transfer times in half-day increments. To continue this work, which was first developed by students in the NYSCF internship program, NYSCF approached Data Clinic for data science support.

NYSCF’s initial asks were two-fold:

- Could we identify ideal characteristics of plates ready for passage?

- Could we use that information to predict the optimal time for passage several days in advance?

“The Data Clinic team is doing outstanding work with our researchers to optimize our studies, and it is a true testament to the power of data science for improving research, and in turn, outcomes for patients,” noted Rick Monsma, PhD, NYSCF Senior Vice President of Scientific Operation. “Understanding when to optimally passage cells also gives scientists back a bit of their most valuable asset: their time. We were excited to hit the ground running on this project with Two Sigma, and we have already learned a great deal thanks to their outstanding models and commitment to collaboration.”

Data challenges

As with any project, we spent a lot of time with the engaged team at NYSCF, getting to know the extraordinary work they do and how they do it. We needed to understand the lifecycle of the samples/plates throughout the TGSCA™, the tasks and roles of the researchers, how this work fits into NYSCF’s bigger picture, and what data is collected and when throughout the process.

When considering the characteristics of plates passaged at the ideal time, we needed to have ground truth or a “set of truth.” Defining criteria around the optimal time to passage cells allowed us to delve into datasets measured in the millions of rows that NYSCF had robustly and uniformly collected since early 2013.

Predicting passage time

Using a training set from NYSCF’s data, our team of volunteers set out to investigate three different modeling approaches:

- A naïve logic-based approach to set a baseline

- A survival model

- A Bayesian model

The baseline model was simple. Once a well hit a defined confluence, the ideal passage time would fall within a range of hours later. Not only did this model help us set a baseline, but it also helped us assess if the complexity of the following models ultimately added value.
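The baseline rule described above can be sketched in a few lines. This is only an illustration, not NYSCF's or Data Clinic's actual code: the confluence threshold, the window offsets, and the function name `baseline_passage_window` are all hypothetical, since the post does not state the real values.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical values for illustration only; the post does not give the real ones.
CONFLUENCE_THRESHOLD = 40.0  # percent of the well covered by cells
WINDOW_START_H = 24.0        # window opens this many hours after the threshold is hit
WINDOW_END_H = 48.0          # window closes this many hours after the threshold is hit


def baseline_passage_window(
    readings: Sequence[Tuple[float, float]],
    threshold: float = CONFLUENCE_THRESHOLD,
    start_offset_h: float = WINDOW_START_H,
    end_offset_h: float = WINDOW_END_H,
) -> Optional[Tuple[float, float]]:
    """Return the (open, close) passage window in hours since seeding.

    `readings` is a time-ordered sequence of (hours_since_seeding,
    confluence_percent) pairs for one well. Returns None if the well has
    not reached the threshold yet.
    """
    for hours, confluence in readings:
        if confluence >= threshold:
            # The first reading at or above the threshold anchors the window.
            return (hours + start_offset_h, hours + end_offset_h)
    return None


# Made-up readings: the well first crosses 40% confluence at hour 48.
example_readings = [(0.0, 5.0), (24.0, 20.0), (48.0, 45.0), (72.0, 70.0)]
example_window = baseline_passage_window(example_readings)
```

A rule this simple has no notion of how fast a particular well is growing, which is one plausible reason a fixed window can miss wells that mature faster or slower than usual.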

Next up was the survival model, a methodology commonly used in the medical field to estimate the likely amount of time until an event occurs. Under the surface, it essentially produces the probability of the event having happened as a function of time. We had to apply it twice: once for the start of the optimal transfer window and once for its close. The mechanics and complexity of this approach allow for fine-tuning to reach the precision and recall best suited to its application; the tradeoff is heavier compute demands whenever the model needs to be retrained.
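To make "the probability of the event happening over time" concrete, here is a minimal sketch of a Kaplan-Meier estimator, one of the simplest survival techniques. The post does not say which survival model the team used, so Kaplan-Meier is swapped in here purely for illustration; it ignores covariates such as environmental conditions, and the function names and example data are assumptions.

```python
from typing import List, Sequence, Tuple


def kaplan_meier(
    durations: Sequence[float], observed: Sequence[bool]
) -> List[Tuple[float, float]]:
    """Estimate the survival curve S(t) = P(event has not happened by t).

    durations[i] is the time until the event (or until we stopped watching),
    observed[i] is True if the event was actually seen, False if censored.
    Returns (time, S(time)) at each distinct time with an observed event.
    """
    records = sorted(zip(durations, observed))
    at_risk = len(records)
    survival = 1.0
    curve: List[Tuple[float, float]] = []
    i = 0
    while i < len(records):
        t = records[i][0]
        events = 0
        leaving = 0
        # Group every record that shares this time point.
        while i < len(records) and records[i][0] == t:
            events += 1 if records[i][1] else 0
            leaving += 1
            i += 1
        if events > 0:
            survival *= 1.0 - events / at_risk
            curve.append((t, survival))
        at_risk -= leaving
    return curve


def prob_event_by(curve: Sequence[Tuple[float, float]], t: float) -> float:
    """Probability that the event has happened by time t (1 - S(t))."""
    survival = 1.0
    for time, s in curve:
        if time > t:
            break
        survival = s
    return 1.0 - survival


# Made-up example: days until five wells entered their passage window.
# The third well was censored (observation stopped before the window opened).
open_curve = kaplan_meier([2, 3, 3, 5, 6], [True, True, False, True, True])
p_open_by_day_4 = prob_event_by(open_curve, 4)
```

Following the "apply it twice" idea in the paragraph above, one curve would be fitted on the times at which the transfer window opens and a second on the times at which it closes.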

Finally, the Bayesian model is an approach that allows for real-time adjustments in response to new data. Using the fundamental concept of cell growth rate, the Bayesian approach essentially predicts the confluence level at every point in time, given all the confluence levels captured thus far. The benefit of this approach is that it quickly adapts to new observations after data capture errors, which can be an advantage in real-time applications.
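The idea of updating a growth-rate belief as each confluence reading arrives can be sketched with a textbook normal-normal conjugate update. This is not the team's model: the exponential-growth assumption, the known noise variance, the prior, and all function names below are assumptions made only to illustrate the mechanism.

```python
import math
from typing import Sequence, Tuple


def update_growth_rate(
    prior_mean: float, prior_var: float, observed_rate: float, noise_var: float
) -> Tuple[float, float]:
    """Normal-normal conjugate update of the belief about the growth rate."""
    post_var = 1.0 / (1.0 / prior_var + 1.0 / noise_var)
    post_mean = post_var * (prior_mean / prior_var + observed_rate / noise_var)
    return post_mean, post_var


def posterior_growth_rate(
    readings: Sequence[Tuple[float, float]],
    prior_mean: float,
    prior_var: float,
    noise_var: float,
) -> Tuple[float, float]:
    """Fold every consecutive pair of readings into the growth-rate belief.

    `readings` holds time-ordered (hours, confluence_percent) pairs with
    confluence > 0. Assuming exponential growth, each pair yields one noisy
    observation of the per-hour log growth rate.
    """
    mean, var = prior_mean, prior_var
    for (t0, c0), (t1, c1) in zip(readings, readings[1:]):
        rate = (math.log(c1) - math.log(c0)) / (t1 - t0)
        mean, var = update_growth_rate(mean, var, rate, noise_var)
    return mean, var


def predict_confluence(
    last_confluence: float, rate_mean: float, hours_ahead: float
) -> float:
    """Project confluence forward with the posterior mean rate, capped at 100%."""
    return min(100.0, last_confluence * math.exp(rate_mean * hours_ahead))


# Made-up readings and prior, for illustration only.
well_readings = [(0.0, 5.0), (24.0, 10.0), (48.0, 20.0)]
rate_mean, rate_var = posterior_growth_rate(
    well_readings, prior_mean=0.02, prior_var=1e-4, noise_var=1e-4
)
forecast = predict_confluence(well_readings[-1][1], rate_mean, hours_ahead=24.0)
```

Because each new reading only nudges the posterior, a single bad measurement shifts the forecast less and less as evidence accumulates, which is the kind of robustness to data capture errors the paragraph above describes.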

Comparing performance

When it came to cross-model comparisons, we chose to assess ultimate performance using each model’s f-score, the harmonic mean (a numerical average typically used when averaging rates) of the model’s precision and recall. In this specific case, high precision meant that we reduced the chances a well would be transferred too early (before it had enough cells), and high recall meant that we reduced the chances a well would be transferred too late (after the cells had differentiated). F-scores range from 0 to 1, with 1 representing perfect precision and recall.
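For readers who want the arithmetic, the f-score described above can be computed as follows. The counts in the example are invented to show how high precision paired with low recall pulls the score down; they are not results from this project.

```python
from typing import Tuple


def precision_recall_f1(
    true_pos: int, false_pos: int, false_neg: int
) -> Tuple[float, float, float]:
    """Return (precision, recall, f1) from confusion-matrix counts.

    precision = TP / (TP + FP)
    recall    = TP / (TP + FN)
    f1        = 2 * precision * recall / (precision + recall)
    """
    predicted = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / predicted if predicted else 0.0
    recall = true_pos / actual if actual else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2.0 * precision * recall / (precision + recall)
    return precision, recall, f1


# Invented counts: 8 correct "ready" calls, 2 premature calls, 8 missed wells.
precision, recall, f1 = precision_recall_f1(true_pos=8, false_pos=2, false_neg=8)
```

Here precision is 0.8 and recall is 0.5, yet the f-score lands near 0.62, below their arithmetic mean of 0.65, because the harmonic mean is pulled toward the weaker of the two.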

The Bayesian and survival models had strikingly similar f-scores, with slight variation in the strength of precision vs. recall. For something so straightforward, the baseline model performed surprisingly well on precision, but its low recall meant that a large number of cell lines would likely remain in wells for too long. In the end, we concluded that the added complexity of the other two approaches was worth the investment to ensure higher-quality samples.

Putting it into practice

NYSCF’s research team was eager to test both models live on their system before making the ultimate decision between the Bayesian and survival models. With both codebases in hand, NYSCF is currently implementing both approaches, first testing the models on real-time data and then conducting comparative analyses. In parallel, NYSCF is capturing feedback from researchers on whether they agree with each model’s call, with the goal of developing a novel ground-truth dataset. We look forward to putting our heads back together with NYSCF to assess the performance of both approaches, as well as their experience managing the pipeline.

Part II teaser

How exactly do the technicians review the state of iPSC growth in some of these experiments with the TGSCA™? The NYSCF team not only built their own proprietary robotic system, but also layered on a custom laboratory information management system (LIMS) that has allowed researchers to collect and interact with data and images through custom desktop and web-based applications.

Look out for our next blog in this series to learn about a tool we designed to interact with data generated by NYSCF’s Monoqlo℠ system, and hear how our team of volunteer engineers collaborated with NYSCF on improvements to their software solution so they can seamlessly adapt to their expanding resources.