The International Conference on Learning Representations (ICLR) is is one of the “Big 3” international machine learning conferences (along with ICML and NeurIPS). ICLR is steadily gaining a reputation for the cutting-edge research presented there each year, with a particular focus on deep learning, as well as other areas of artificial intelligence, data science, and statistics.

Two Sigma is always eager to stay abreast of the latest machine learning research–and to contribute back to the community when we can. As in past years, we sponsored ICLR 2019, which took place in New Orleans, and several Two Sigma researchers and engineers were in attendance. Below, they highlight a handful of particularly insightful papers, talks, and presentations that may be of interest to practitioners in many different fields.

Special thanks to Rachel Malbin, Kishan Patel, Mike Schuster, and Rhys Ulerich for their contributions to this write-up.

Learning without a Goal

Task-agnostic reinforcement learning received a lot of attention at ICLR 2019, with a workshop dedicated to recent advancements in the field.

Recent works have focused on tying experiences learned without a goal to an action plan when a goal is specified, and to efficient exploration while the agent is exploring a space in free-play.

- Skew-Fit by Vitchyr Pong et al. optimizes state coverage by training a generative model to pick a diverse set of goals for the agent to reach. Over iterative improvements, the generative model approaches a uniform distribution across all reachable states.

- In State Marginal Matching with Mixtures of Policies by Lisa Lee et al., the model seeks to maximize marginal state entropy, as well as mixtures of state distributions, to accelerate exploration and generalization on unseen tasks.

More Efficient Exploration for Reinforcement Learning

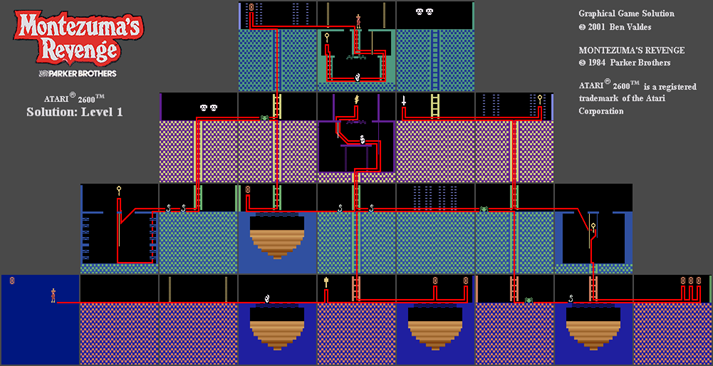

While, in recent literature, reinforcement learning has seen strong performance in environments with dense rewards, games with either very sparse rewards or a single final reward have proven more difficult to learn. Montezuma’s Revenge has been one such difficult game for RL agents to learn, as there is only a single final reward, and the agent has to traverse back to rooms it has already seen to pick up key components.

- Recent work from OpenAI by Burda et al. has achieved record-breaking performance on Montezuma’s Revenge by providing the agent a reward for “curiosity,” or exploring states it has not seen before.

- Previous curiosity algorithms suffered from the “noisy TV problem,” in which agents end up stuck in a loop and receive large rewards from a stochastic change in the environment.

- The paper from Burda avoids the noisy TV problem through random network distillation, where the net produces a deterministic random feature vector for a given state and sidesteps a noisy transition.

The potential benefits of such research aren’t limited to video games, of course. It may help improve agent robustness in a variety of settings with uncontrolled environments (e.g., driving).

End-to-End Optimization with Reinforcement Learning

Recent advancements in reinforcement learning tuned outer loop hyperparameters as a meta-learning task. In these problems, an RL agent receives a reward based on the performance of some inner loop, and then modifies the hyperparameters of the inner loop problem to further maximize its reward.

- Reinforcement learning has been incorporated as a meta-learning loop for finding optimal net architectures, such as in Xie et al.

- Ruiz et al. explored using RL to modify hyperparameters of a simulator to produce different distributions with the goal of maximizing performance on an external validation set

Inspiration Drawn from Differential Equations and Max-Affine Spline Operators

Researchers in deep learning continue to incorporate well-known results from other disciplines:

- FFJORD by Grathwohl et al. builds atop Neural ODEs and Hutchinson’s trace estimator to produce “a continuous-time invertible generative model with unbiased density estimation and one-pass sampling, while allowing unrestricted neural network architectures.”

- AntisymmetricRNN by Chang et al. achieves outperformance vs. classical LSTMs on long-term memory tasks by constructing an RNN resembling a stable ODE.

- Analysis based on max-affine spline operators (MASOs) appeared in work by Balestriero and Baraniuk (pertaining to probabilistic Gaussian mixture models) and in work by Wang et al. (pertaining to RNNs).

Continued Refinement of the Great Tricks

Researchers continued to better understand and automate many common approaches. For example:

- Zhang et al. studied what exactly is the regularization effect provided by weight decay.

- Luo et al. proposed “Switchable Norm,” which automatically behaves like the best of layer, channel, or mini-batch normalization.

Sophisticated Analysis of Training Processes

ICLR 2019 featured work, including a best paper, on characterizing how networks learn:

- Achille et al. quantified how image classification networks respond to temporary “visual defects” during the training process.

- The Lottery Ticket Hypothesis showed that, inside deep networks, both the connection structure and the initialization of sparse subnetworks are critical to achieving high accuracy.

These findings are part of the field’s ongoing aim to speed training and reduce inference costs.

Domain-Aware Loss Function Construction

Several researchers bested prior state-of-the-art performance by incorporating more domain knowledge into their training processes.

- In language-translation tasks, Wang et al. found combining many, e.g., English→German and German→English agents within a dual formulation to be helpful.

- Improving prior work in geometrically-inspired embeddings for learning hierarchies, Li et al. annealed the compactness of their concept representations and demonstrated the approach’s utility in an imbalanced WordNet setting.

Enabling Factorized Piano Music Modeling and Generation with the MAESTRO Dataset

Hawthorne et al. of the Google Magenta group have built a massive public dataset for MIDI music that has been recorded by semi-professional pianists. This is a great database for many kinds of sequence learning problems, not just music.

- The group built a WAV2MIDI2WAV system using Tensorflow that allows researchers to go from waveforms to Pianoroll (live MIDI format) and back again to waveforms.

- Some music is learned by a specific transformer architecture and then sampled from the system to get closer to producing actual, coherent music.