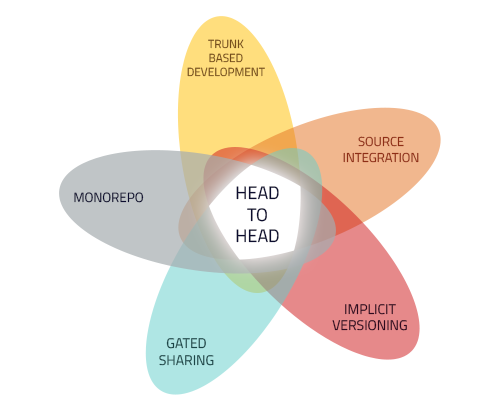

With hundreds of developers working on thousands of interconnected components, Two Sigma needs a software versioning and release system that’s both scalable and stable. In Part 1 of this series, Head of Software Development Life Cycle, Nico Kicillof, explained the rationale behind the process we call “head-to-head development.” Here, in Part 2, he examines some of the challenges that organizations implementing a similar approach may face.

Challenges with Head-to-Head

As numerous as the benefits of head-to-head may be, the solution is not without its challenges.

Scalability Challenges

Organizations following head-to-head may find it difficult to keep up with growth in developer headcount, number of components, and increasingly complex dependencies:

- Performance: Sharing gates need to be fast, as they have a direct impact on code velocity. In most cases, systems must be able to build and test large amounts of code in a very short timeframe (usually seconds).

- Dependency Granularity: A development system’s scalability can be dramatically affected by how accurately dependencies can be specified between software elements in different projects. At the coarsest level of granularity, components declare a dependency on other components as a whole. On the other end of the spectrum, dependencies can exist on specific operations or even files in another project. Coarse-grained dependencies are simpler to maintain for end users, but they can decrease system performance by reducing incrementality (see below). Some systems implement heuristic approaches based on tracking previous runs or performing static analysis to infer fine-grained dependencies from coarser, user-declared ones.

- Dependency Types: Scalability can also be affected by the types of dependencies available within a system. For example, a system does not need to distribute files that are only required at build time to hosts where tests or production software will be running.

- Monorepo tooling: Most version-control systems cannot scale to hold all the code maintained by a large organization while still performing adequately. For example, Google reported the size of its monorepo in January 2015 as containing 2 billion lines of code in 9 million source files with a history 35-million-commits deep. Enabling developers to interact with huge repositories at reasonable speeds is generally achieved through a combination of features that aren’t offered by off-the-shelf source control management systems (e.g., shallow, narrow and sparse cloning). See, for example, Microsoft’s efforts to extend Git to manage large repositories.1,2

- Change Serialization: When performing trunk-based development against a monorepo, commits from developers across the whole organization land on the same central branch. Head-to-head requires each change to be validated for sharing gates on top of its predecessor, and re-validated if another change makes it to the trunk while the previous validations were taking place. Although, in theory, multiple heads could coexist and later be merged, this would introduce complexities when developers attempted to update their local copies of code to a state containing all commits accepted up to that point. For example, the validation of one change might need to block on the validation of another one, or both could be allowed to make independent progress, but one would need to be restarted if the other one completed first. These requirements can limit the development system’s concurrency.

Incrementality Challenges

Incrementality is a common technique used by development systems to satisfy expectations around responsiveness and code velocity. Incrementality is essential to the head-to-head approach and has consequences beyond scalability. Tools can provide different degrees of incrementality along several dimensions:

- Locality

- Local incrementality is restricted to one machine. Building exactly the same code twice on the same machine, for example, often results in a virtual no-op the second time.

- Global incrementality extends this behavior to a distributed system. Building code that has already been built anywhere on any machine in the system is practically instantaneous.

- Affinity can be a compromise to accomplish limited global incrementality with locally incremental tools. The system will try to assign work to a host where it was likely performed before, thus leveraging local incrementality.

- Granularity

- Project-level incrementality avoids re-work within a project when no files have changed, but might end up redoing intra-project steps due to unrelated changes to project files.

- Command-level incrementality avoids running a tool (e.g., compiler) on inputs that have been seen previously, but often reprocesses files that haven’t changed when another input file is modified.

- File-level incrementality reuses the output of a previous execution to only generate deltas in subsequent executions.

- Detection

- Timestamp-based incrementality compares modification dates of input and output files by querying file-system metadata. Besides being the brittlest change-detection method (since timestamps can change without contents changing), this approach limits the search space to the last run only. Timestamps are also of little use in global-incrementality scenarios.

- Content-based incrementality locates previous runs based on (a hash of) the contents of the files about to be consumed. When compared to timestamp-based incrementality, this offers a compromise between the time it takes to compute content hashes and the time saved by preventing more reruns.

- Structural incrementality is similar to content-based incrementality, but only considers the contents of source files (not of intermediate artifacts) that transitively go into an operation. One advantage of structural incrementality over content-based incrementality is that the whole graph of transformations that will need to be performed due to particular code change can be computed in advance. A disadvantage is that content-based incrementality stops the propagation of a change if it doesn’t affect the result of a transformation (for example, if only comments were changed or if implementation details were changed but not interfaces).

Stability Challenges

- Reproducibility: There are multiple reasons for operations performed by a development system to be fully reproducible.3 This desired characteristic of development tools becomes especially critical in a head-to-head development setting, where incrementality is of paramount importance. In particular, the more often sharing gates (validations) yield the exact same result, the higher the level of incrementality and blame attribution.

- Consistency: In a head-to-head world, unreliable gates can have worse consequences than consistent false positives or negative results. In particular, a lack of consistency affects velocity, causes frustration in users and hinders blame attribution. Notice that consistency can be broader than mere functional reproducibility. For example, re-runs of a test can encounter timeouts that were not reached before.

- Infrastructure Reliability: Infrastructure failures and bottlenecks are particularly problematic in head-to-head development, since more parts of the system are involved in basic operations, such as allowing code to propagate.

Cultural Challenges

- Gate Overloading: Sharing gates are intended as a first line of defense; they ensure code is ready to be used by the rest of the organization. They are also very effective at preventing technical debt accrual and guaranteeing blame attribution.With this power come some possible concerns. For one, the convenience of gates can make it tempting for developers to use them as dumping grounds for all kind of validations, some of which don’t belong. Just a few possible examples: long-running tests, multiple build configurations, tests that depend on more than one version of one component or that access resources outside the development system. Conversely, developers may mistake gates for all the integration testing needed to release software. This is usually an error, since sharing gates have very specific limitations and will likely provide insufficient proof that software will run correctly in a production environment. Offering a continuous integration / continuous delivery (CI/CD) solution as part of the system supporting head-to-head development sends the right message by making it clear that there are different types of validations with different purposes. However, this doesn’t solve the problem that post-sharing validations (especially those not run on every individual change) are not as effective at preventing technical debt creep and assigning blame as their pre-sharing counterparts.

- Shared Code Ownership: A team introducing a breaking change to a component is in the best position to understand how the old and new interfaces and behaviors differ. Accordingly, these developers are often best positioned to amend affected code in their downstream dependents. While they might need help (and certainly a code review) from the parties directly responsible for maintaining the downstream code, shifting the onus to these other teams causes a set of problems, beginning with the limited incentive they usually have to to do the work. In consequence, an organization following head-to-head development must apply a policy of shared code, where engineering teams play the role of stewards rather than sole owners of their components.

- Dependency Trust: As a sort of counterweight to shared-ownership policies, head-to-head works best when there is an understanding between participating members that teams can be trusted to manage the components they’re in charge of. Internal consumers are expected to trust producers to provide the right thing. Consumers are also expected to pick up upstream changes as they are published. If they aren’t satisfied with the quality of their dependencies or don’t agree with their direction, they can take up their concerns directly with their counterparts or follow other escalation channels that aren’t typically available when independent parties are collaborating. Dependency trust fosters reuse and reduces duplication of effort. It isn’t cost-effective for a single organization to invest in developing and supporting different solutions to the same problem, or even multiple versions of a component.While this might be seen as a lost opportunity for innovation, the truth is that no matter how large an organization, the size of its internal marketplace can hardly reach a critical size where “buyers” will make the right decisions.

- Test Coverage: One of the advantages engineers working on internally-used components have is that their customers are their coworkers. Thanks to tests protecting their dependents, they can identify and work with all consumers affected by a change in order to restore interoperability. This allows them to be agile and reduce their time to market. Without the proper tests, though, this advantage can be lost as changes go into the single trunk and clear sharing gates, but can no longer be trusted to leave the code tree in a healthy state. Fortunately, the incentives for component owners to put in place tests that ensure critical functionality are high, as these tests automatically shield their software from being negatively affected by upstream changes.

Scenario Challenges

Head-to-head fosters code reuse and enables joint evolution of library-type dependencies for production-quality homegrown software. Scenarios that don’t match this description pose challenges to the approach. Here are a few examples:

- Third-Party Dependencies: External software leveraged for internal development has to receive special treatment in a head-to-head environment. For starters, it can’t be held to the implicit versioning standard, because it almost invariably contains references to versioned dependencies (this is especially true for open source software). External projects are also often ill-suited to be built by a distributed system for several reasons: They rely on intricate build logic that doesn’t adhere to sound build-reproducibility principles, they contain hidden platform dependencies, and sometimes they require complex environments to be installed on build machines. Importing external components as binaries or picking them up during builds is not always possible due again to platform dependencies, relocation limitations, and potential diamond dependencies. In addition, external code is maintained by third parties and open communities, which complicates the process of adapting it to systemic changes.

- Service Dependencies: Given two components in a producer-consumer relationship, there are some fundamental differences between an interface that makes a (local) procedure call into a library vs. a remote service call. The most relevant from a development system perspective is the inversion of control that causes the caller and callee to depend on a common interface for their builds, rather than the caller depending directly on the callee. The actual functional dependency is normally established at runtime. In order to provide robust validations of service integration, development systems need to support static declarations of service dependencies. These dependencies can (and should) be used to ensure compatibility of component versions that coexist at head. As explained in the above discussion about integration, these validations aren’t sufficient to declare services ready to be released. Since services are rarely deployed in synchronized cycles, they also have to be tested against multiple versions of their counterparts, which typically must occur outside sharing gates.

- Prototyping: When it comes to its effect on innovation, head-to-head can be a double-edged sword, depending on the position the components occupy in the software stack. Innovation at the bottom or middle of the stack benefits from the added code velocity that head-to-head provides. Developers can make a breaking change to their interfaces or behaviors and easily propagate it to all consumers, all of whom are protected by sharing gates. On the other hand, head-to-head can slow down innovation at the top of the stack, because engineers working there might need to frequently adapt to upstream changes, where explicit versioning would allow them to stick with stable versions of their dependencies and focus on their own code. One way to mitigate this issue is to provide prototyping environments where experimentation can occur. These environments can also be much more flexible about references to third-party software. Clearly, code in the prototyping space must pass through gated sharing before it can be merged into the head-to-head world.

- Open-Source Contributions: Organizations that heavily leverage open source software projects for internal development often find opportunities to contribute back to those same projects. Designing internal packages so that they can be upstreamed is a sound development practice. A successful upstreaming reduces technical debt by avoiding the need to continue patching future versions of the project. The impedance mismatch between internal and external tools and processes can pose a challenge when round-tripping changes to an open source project contributed to or even initiated by an organization that applies head-to-head development.

- Secure Code: Most organizations have portions of their code that need to be more protected than others (business-critical IP, tented projects, etc.). Access to these projects is often restricted to a subset of the organization’s developers. In principle, this requirement contradicts some of the basic tenets of head-to-head. Developers changing (non-secure) dependencies of secure components should not only be able to check that the changes they make don’t break their dependents, but should also have access to the latter’s code to troubleshoot a break (and should even feel entitled to adapt it).

Automating Head-to-Head

Two Sigma has developed a proprietary system that provides tooling support for head-to-head. The system is called VATS (Versioning at Two Sigma). It includes monorepo support on top of Mercurial, enabling users to work on narrow clones of the code they are focusing on, while enabling atomic commits across multiple components. The monorepo contains more than 6,000 projects, approximately half of which are internally developed, encompassing some 200 million lines of code. The other half is imported third-party software.

VATS runs distributed builds and tests on a farm processing more than 20,000 builds per day, for a total build footprint of 250,000 components daily. Over 100,000 pre-push tests are run every working hour with peaks of 300,000 an hour. Median time for an incremental build of the whole codebase is 25 seconds; 79 seconds for an incremental test run.

VATS offers a number of advanced features, including support for development across programming languages and the ability to make artifacts from distributed builds available for execution on any machine in the company. Artifacts are also exposed through a distributed file system backed by an object store, which allows on-demand retrieval by build workers, test workers and engineering workstations.

While VATS effectively addresses some of the head-to-head challenges described above and mitigates or works around others, it does lose the battle against a few of them. For one, it follows a closely coupled, monolithic design that ties concerns that should be orthogonal. For example, a first class entity called a “codebase” is the (coarse-grained) atomic unit of dependency, version control, build, test and deployment. The resulting system is harder to extend and maintain than we would like. Altering VATS to better address the head-to-head challenges it doesn’t adequately solve would amount to re-writing it from the ground up and would require a significant engineering effort that Two Sigma’s SDLC team is not staffed to face on its own. Instead, we are rebuilding the system in place through a process we call “unbundling.” This process consists of replacing functionality piecemeal with best-of-breed open-source tools that we integrate and extend with code that we develop. Where this in-house code is not specific to our environment, we contribute it back to the open source community. Examples of these tools are Git for source control and Bazel, Google’s internal build system that has recently been open sourced.