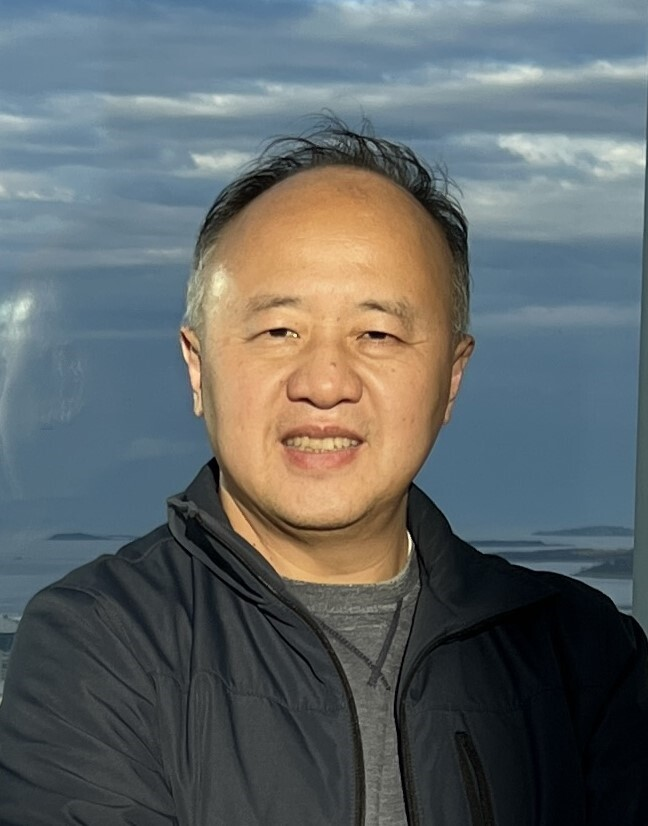

Two Sigma has a long history of working with leading professors from top universities via ongoing consulting, sabbaticals, research awards, and full-time employment in the firm.

Dr. Jun Liu, a Professor of Statistics at Harvard University, has been partnering with Two Sigma on a variety of projects since 2015. He received his BS degree in mathematics in 1985 from Peking University and Ph.D. in statistics in 1991 from the University of Chicago.

He and his collaborators introduced the statistical missing data formulation and Gibbs sampling strategies for biological sequence motif analysis in the early 1990s. The resulting algorithms for protein sequence alignments, gene regulation analyses, and genetic studies have been adopted by many researchers as standard computational biology tools.

He has also made fundamental contributions to statistical computing and Bayesian modeling. He pioneered sequential Monte Carlo (SMC) methods and invented novel Markov chain Monte Carlo (MCMC) techniques. In addition, Dr. Liu pioneered novel Bayesian modeling techniques for discovering nonlinear and interactive effects in high-dimensional data and led the development of theory and methods for sufficient dimension reduction in high dimensions.

We recently asked Dr. Liu about his work at Harvard and with Two Sigma.

What is your area of research?

I am a Professor of Statistics at Harvard University, with a courtesy appointment at Harvard School of Public Health. I received my BS degree in mathematics in 1985 from Peking University and Ph.D. in statistics in 1991 from the University of Chicago.

I have been working on Bayesian modeling and Monte Carlo computation, with an application focus on bioinformatics, for most of my career. I have also been quite fascinated by the challenge of finding principled ways of doing estimation and uncertainty quantification for complex models.

Philosophically I am quite Bayesian: I always use Bayesian principles to guide me in solving problems in any practical setting (although the final solution may not appear in a typical Bayesian form). But practically, the Bayesian method is too “self-contained” (even though it is a perfectly self-consistent system), and I want to have sanity checks from some external measures, such as frequentist properties. I thus hold practical Bayesian views similar to those of my friend and mentor Donald Rubin and my PhD advisor Wing H. Wong.

Your work has been widely influential across theoretical and applied statistics, from Monte Carlo sampling to cancer treatment to, more recently, statistical control. Can you briefly describe your work in false discovery rates?

This work was motivated by a junior colleague of mine, Lucas Janson, a brilliant young researcher. He worked with his advisor Emmanuel Candès on the development of knockoff methods for false discovery rate (FDR) control in regression problems. I thought it was a very clever method, but it requires complete knowledge (a full model) of the joint distribution of all the features, which is clearly quite impossible in many applications.

Thus, I started to talk to my students, encouraging them to think of a simpler and more robust approach from the angle of data disturbance and stability. One idea is to add noise to each feature directly and see how that affects the results, and this idea led to our development of the “Gaussian mirror.”

Another idea is to do data splitting, and contrast estimates from the two randomly split parts of the data. Currently we are trying to combine some Bayesian ideas and methods together with FDR controls so that both prior information can be used and FDR can be controlled in the frequentist sense. For me, the attraction of this problem is that it is very fundamental and lies right at the middle of the Bayesian-frequentist divide.
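As a rough illustration of the data-splitting idea, the sketch below assumes that coefficient estimates have already been computed separately on two random halves of the data. It combines them into a "mirror statistic" per feature and picks a cutoff from the symmetry of the noise features. The function names and the specific combining rule (sign agreement times the sum of magnitudes) are assumptions made for this example, not necessarily the exact construction used in Dr. Liu's work.

```python
def mirror_statistics(beta_a, beta_b):
    """Combine two independent coefficient estimates, one per data half.

    Estimates that agree in sign give a large positive value (evidence of a
    real effect); estimates that disagree give a negative value, which is how
    a pure-noise feature behaves about half the time.
    """
    stats = []
    for a, b in zip(beta_a, beta_b):
        product = a * b
        sign = 1.0 if product > 0 else -1.0 if product < 0 else 0.0
        stats.append(sign * (abs(a) + abs(b)))
    return stats


def fdr_threshold(stats, q):
    """Smallest cutoff t whose estimated false discovery proportion is <= q.

    For null features the statistic is assumed to be symmetric about zero, so
    the count below -t serves as an estimate of the false positives above t.
    """
    candidates = sorted({0.0} | {abs(m) for m in stats})
    for t in candidates:
        estimated_false = sum(1 for m in stats if m < -t)
        selected = sum(1 for m in stats if m > t)
        if selected > 0 and estimated_false / selected <= q:
            return t
    return float("inf")  # no cutoff meets the target level


def select_features(beta_a, beta_b, q=0.1):
    """Indices of features whose mirror statistic exceeds the chosen cutoff."""
    stats = mirror_statistics(beta_a, beta_b)
    t = fdr_threshold(stats, q)
    return [j for j, m in enumerate(stats) if m > t]


# Toy estimates (made up for illustration): features 0, 1, and 5 agree
# strongly across the two halves, while the others look like noise.
half_a = [2.0, -1.5, 0.25, -0.25, 0.125, 1.5]
half_b = [1.5, -2.0, -0.125, 0.125, 0.125, 1.0]
print(select_features(half_a, half_b, q=0.25))  # [0, 1, 5]
```

The key design choice is that no model for the joint distribution of the features is needed: the negative side of the mirror statistics acts as a built-in reference for how many large positive values could arise by chance.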

On the one hand, if we really know the true distribution of the parameters (e.g., regression coefficients), and the data are indeed generated from the posited model, then the Bayesian solution is optimal for FDR control. It also achieves perfect local FDR control (i.e., each feature's posterior probability of being a “discovery” is really its own “true discovery rate”).

On the other hand, if we have to estimate something or our prior is not exactly the same as the truth, the Bayesian solution does not control FDR. We are seeking an optimal balance between the Bayesian optimality and the frequentist goal (of controlling FDR).
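The Bayesian side of this trade-off can be sketched with a simple two-groups model. The example below assumes each test statistic z is drawn from N(0, 1) with probability `pi0` (no effect) and from N(0, 1 + `tau2`) otherwise, and that both `pi0` and `tau2` are known exactly. These modeling choices and all function names are assumptions for illustration only. Under them, the posterior null probability (the local FDR) of each z can be computed directly, and rejecting the smallest local FDRs while their running average stays below q keeps the posterior expected false discovery proportion at or below q.

```python
import math


def normal_pdf(z, variance):
    """Density of a zero-mean normal distribution with the given variance."""
    return math.exp(-z * z / (2.0 * variance)) / math.sqrt(2.0 * math.pi * variance)


def local_fdr(z, pi0, tau2):
    """Posterior probability that z is a null, under the assumed mixture."""
    null = pi0 * normal_pdf(z, 1.0)
    signal = (1.0 - pi0) * normal_pdf(z, 1.0 + tau2)
    return null / (null + signal)


def bayes_select(lfdr_values, q):
    """Reject the smallest local FDRs while their running mean stays <= q."""
    order = sorted(range(len(lfdr_values)), key=lambda j: lfdr_values[j])
    selected, total = [], 0.0
    for j in order:
        if (total + lfdr_values[j]) / (len(selected) + 1) > q:
            break
        total += lfdr_values[j]
        selected.append(j)
    return sorted(selected)


# Hypothetical z-scores; pi0 and tau2 are treated as known here.
z_scores = [0.3, 4.2, -5.0, 1.1, 3.9]
lfdr = [local_fdr(z, pi0=0.8, tau2=9.0) for z in z_scores]
print(bayes_select(lfdr, q=0.1))
```

If `pi0` or `tau2` had to be estimated, or were simply wrong, the computed local FDRs would no longer match the true null probabilities, which is exactly the situation in which the frequentist guarantee can fail.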

Does the explosive growth in modern deep learning, especially LLMs, make FDR more important and relevant, or more difficult to do accurately? More generally, how can AI stay disciplined and retain statistical power in its claims?

This is an interesting and challenging question. Conceptually, FDR control is more related to interpretability and scientific decision-making related to features used in a prediction task. Researchers have successfully extended the knockoff framework to DNN models, and there have been some recent developments in conformal and model-free inference that can help discipline various ML tools.

In fact, the great strides achieved by modern AI are aided not only by large data and tremendous computing power, but also by the adoption of a few fundamental statistical ideas, such as cross-validation (always using testing samples to guide the training), latent structure modeling (such as variational autoencoders), and integration (following the way statisticians treat missing data).

I think it is also important to attach appropriate uncertainty measures to a prediction algorithm, although sometimes this is difficult to do. FDR control and conformal inference are our current efforts towards this goal, and I personally would like to be more Bayesian along this direction.

How does collaborating across academia and industry add to your research?

Such collaborations have been very inspiring and stimulating for me, and they also provide me with philosophical foundations and motivations for formulating my research problems, i.e., they “ground” me.

In earlier years (say, 15 years ago), I mostly collaborated with some biopharma companies and general consulting companies, trying to help analyze their genomics and biomedical data.

More recently I have been collaborating with Two Sigma on analyzing financial data and evaluating various learning and prediction algorithms. These efforts help me focus on more practically relevant problems, and constantly remind me to think about how my collaborators and other practitioners may be able to understand and use the tools I develop.

You’ve collaborated with Two Sigma for more than nine years. Can you elaborate on the partnership and why this is important to you?

I have mainly worked with two statistical groups at Two Sigma, and I have been very inspired by the high research levels of my collaborators here, who follow the most recent developments in both statistics and machine learning very closely.

I am also a lot closer to data and more concerned with applicability (of a statistical method) than before. On the other hand, my research expertise in statistics has also helped make some of the heuristic methods suggested and explored by my collaborators more rigorous, systematic, and sometimes more general and enriched.

What, outside of your work, has sparked your curiosity recently?

Interesting question. Our family recently got into squash, especially since we are trying to train my 10-year-old early, as he seems to have some natural ability for it. So I got interested in how the ranking and rating systems work for squash players all over the world. A player’s rating score is based on a statistical model (a logistic-regression type) that takes into account all the participating players’ records in an iterative fashion. Quite sophisticated and interesting.

Learn more

Are you an academic interested in working with Two Sigma? Learn more about our Academic Partnership programs here.